High performance computing (HPC) & AI have become a critical part of our modern world, performing complex simulations and calculations that are essential to scientific research, engineering, security and other fields. They are now necessary to help solve some of the world’s toughest challenges, such as global climate modelling, cancer and infectious disease research, and finding solutions to large socio-economic problems. As the scale and complexity of these problems continue to grow, so does the performance required of the underlying computation.

Modern microprocessors have achieved remarkable performance improvements since their inception, thanks to Moore’s Law: the doubling of the transistor count on a chip roughly every two years as fabrication technology enables smaller and smaller transistors. What is less widely recognized is that this exponential growth in processing power has been matched by similar gains in energy efficiency and processor speed. A 2010 study showed that the amount of computation that could be performed per unit of energy doubled about every year and a half, a trend known as Koomey’s Law.[1]
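To make the compounding concrete, here is a minimal Python sketch of what a fixed doubling period implies over a decade. Only the two-year doubling period comes from the text above; the function names `growth_factor` and `annual_rate` are illustrative assumptions, and this is a back-of-the-envelope calculation rather than a model of any real product roadmap.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Multiplicative growth after `years` for a quantity that doubles
    once every `doubling_period_years`."""
    return 2.0 ** (years / doubling_period_years)


def annual_rate(doubling_period_years: float) -> float:
    """Equivalent compound growth per year, as a fraction (0.41 means 41%)."""
    return 2.0 ** (1.0 / doubling_period_years) - 1.0


if __name__ == "__main__":
    # Moore's Law as stated above: transistor count doubles every two years.
    print(f"Growth over 10 years: {growth_factor(10, 2.0):.0f}x")  # 32x
    print(f"Equivalent annual growth: {annual_rate(2.0):.1%}")     # 41.4%
```

The same helper can be reused for an energy-efficiency trend by passing in a different doubling period.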

As the demand for HPC & AI continues to grow, often in supercomputers and large data centers, so do concerns about the environmental impact of these systems. Data center sustainability has lately drawn increasing attention because of its implications for total cost of ownership and for the climate. Data centers face a huge challenge with regard to their energy use as they scale up to exascale class and beyond. As the demand for high-performance compute keeps growing, energy use is becoming a limiting factor. It affects not only the environment but also the profitability of data center operators, since the industry keeps requiring more computational performance. Massive performance-per-watt gains are therefore required as the industry advances to the next milestone.

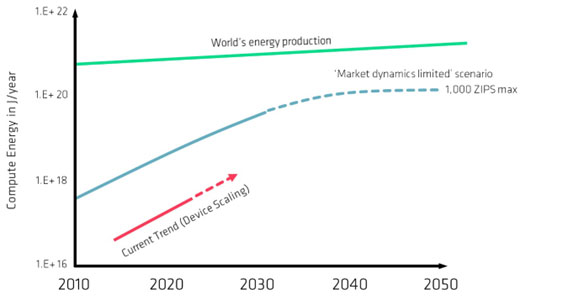

The Decadal Plan for Semiconductors published by the Semiconductor Research Corporation (SRC) highlights this growing demand for compute energy relative to the world’s energy production in the accompanying chart. The International Energy Agency (IEA) has referred to energy efficiency as “the world’s first fuel.” Similarly, the Alliance to Save Energy has stated that “energy efficiency is one of the most important tools for avoiding climate change by reducing use of fossil fuels.” While power-efficient data centers alone cannot fully address climate change, they are an important part of the solution.

Source: Decadal Plan for Semiconductors – SRC; ZIPS = 10^21 compute instructions per second

Now let’s look at the technologies that can address these performance and energy efficiency challenges.

Heterogeneous Compute Architectures

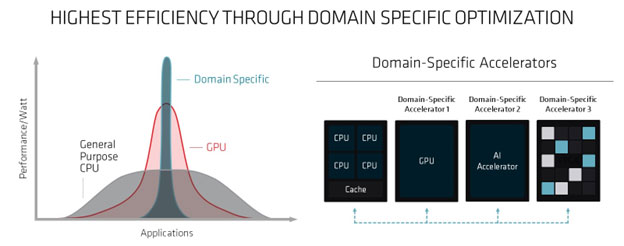

As computational demand grows, driven by HPC and AI, a new and broad portfolio of domain-specific compute architectures is necessary, not only to right-size the compute hardware to the problem but also to optimize form, fit and function so that the resulting solution consumes the least energy. At AMD, we are focused on these new architectures, which range from dedicated AI accelerators, FPGAs and GPUs to networking solutions, and which complement the traditional x86 architectures that are the backbone of computation today. The potential of these heterogeneous architectures is best realized when they are tightly integrated in hardware and software so that different parts of a computational workload can be offloaded to the right compute engine.
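As a rough illustration of what "offloading to the right compute engine" can mean, the sketch below picks, for each kind of task, the engine that would finish it with the least energy (power multiplied by run time). Everything in it is an assumption made for illustration: the `Engine` class, the task kinds, and every power and throughput figure are hypothetical placeholders, not measurements of any AMD product or a description of any real scheduler.

```python
from dataclasses import dataclass


@dataclass
class Engine:
    name: str
    power_watts: float            # hypothetical average power while busy
    throughput: dict[str, float]  # hypothetical work units per second, by task kind


def energy_joules(engine: Engine, kind: str, work_units: float) -> float:
    """Energy to finish the task on this engine; infinite if unsupported."""
    rate = engine.throughput.get(kind)
    if not rate:
        return float("inf")
    return engine.power_watts * (work_units / rate)


def pick_engine(engines: list[Engine], kind: str, work_units: float) -> Engine:
    """Choose the engine with the lowest estimated energy for this task."""
    return min(engines, key=lambda e: energy_joules(e, kind, work_units))


# Placeholder figures chosen only to make the example readable.
ENGINES = [
    Engine("cpu", 200.0, {"control": 100.0, "matmul": 10.0}),
    Engine("gpu", 500.0, {"control": 20.0, "matmul": 500.0}),
    Engine("ai_accelerator", 300.0, {"matmul": 600.0}),
]

if __name__ == "__main__":
    for kind in ("control", "matmul"):
        best = pick_engine(ENGINES, kind, work_units=1000.0)
        print(f"{kind}: {best.name}")
```

With these made-up numbers the branch-heavy "control" task stays on the CPU, while the dense "matmul" task goes to the accelerator even though the accelerator draws more power than the CPU, because it finishes far sooner.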

Intelligent Packaging Technologies

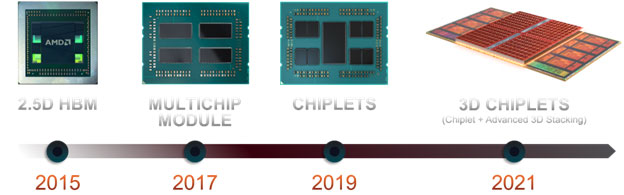

As compute architecture evolves to support heterogeneity, packaging solutions must evolve concurrently to reduce the complexity of integrating multiple technologies into a data center. Innovation in packaging, from 3D stacking to chiplet architectures, has enabled computational performance to grow to meet the demand and efficiency targets of exascale-class systems. These packaging technologies enable AMD to integrate the different heterogeneous compute engines as chiplets, producing efficient products that are right-sized for the problem space, which in turn improves power efficiency. These products range from APUs for HPC that integrate CPUs and GPUs together to dedicated AI accelerators that couple CPUs with custom AI engines. Packaging also allows intelligent, dynamic power management across the chiplets, where the right compute engines are powered on or set to an idle state depending on the workload.
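The effect of idling unused chiplets can be sketched with simple arithmetic. The example below is a minimal sketch under stated assumptions: the `Chiplet` class, the chiplet names, and all wattage values are hypothetical placeholders, and real power management involves many more states and transition costs than an active/idle pair.

```python
from dataclasses import dataclass


@dataclass
class Chiplet:
    name: str
    active_watts: float  # hypothetical power when the engine is in use
    idle_watts: float    # hypothetical power when the engine is idled


def package_power(chiplets: list[Chiplet], needed: set[str]) -> float:
    """Total package power when only the `needed` chiplets are active
    and every other chiplet is held in its idle state."""
    return sum(
        c.active_watts if c.name in needed else c.idle_watts
        for c in chiplets
    )


# Placeholder figures for illustration only.
PACKAGE = [
    Chiplet("cpu", active_watts=100.0, idle_watts=10.0),
    Chiplet("gpu", active_watts=300.0, idle_watts=20.0),
    Chiplet("ai_engine", active_watts=150.0, idle_watts=5.0),
]

if __name__ == "__main__":
    all_on = package_power(PACKAGE, {"cpu", "gpu", "ai_engine"})
    hpc_only = package_power(PACKAGE, {"cpu", "gpu"})
    print(f"All engines active: {all_on:.0f} W")   # 550 W
    print(f"AI engine idled:    {hpc_only:.0f} W")  # 405 W
```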

Communication Technology

Communication is key when processing large amounts of data in high performance computers. Globally, data transmission networks account for about 1% – 1.4% of electricity use, according to analysis from the IEA (iea.org). Communication efficiency is therefore critical at both the macro and micro levels in large data center infrastructures. The ability to move data efficiently between the processor and the outside world is also critical to system performance and efficiency as you scale up and out. Bringing silicon chips, cache and memory closer together, physically and electrically, dramatically reduces the energy of communication at the chip level while also providing higher throughput potential. As the volume of data continues to grow, driven by massive HPC simulations and large language models in AI, improving communication efficiency is taking on greater importance in the journey toward data center energy efficiency.

CONCLUSION

The energy consumption driven by the growing needs of HPC and AI is not offset by Moore’s Law efficiency gains. Reaching zettascale computational performance efficiently requires innovative thinking around heterogeneous architectures, advanced packaging and communication technologies, which combine to provide the most performant and efficient compute infrastructure capable of handling the needs of future HPC and AI use cases. HPC has driven a great deal of technology innovation over the last several decades, and these innovations need to keep gathering momentum in the era of AI to meet the latest computational demand in the most energy-efficient way possible.